When Corey and I started The Duckbill Group in 2019, I sat down with Corey and asked him to braindump everything he looks at when assessing a client’s AWS spend in the first pass.

I then came up with a simple framework that’s easy to memorize and still serves us today.

Step 1: Turn that shit off.

Whatever you’re not using, get rid of it. Really not much more to say there.

Step 2: Store less data.

Keeping data around is expensive as hell. Maybe store less of it. If you must store it, store it in cheaper locations.

Today, we think about this as a holistic data lifecycle and it’s one of the first things we seek to understand with a customer.

Step 3: Move less data.

Bandwidth is expensive in the cloud. The more you move data around, the more you’re paying.

But this also comes down to things like compression too: move the same data, but compress it before transit it so the size isn’t as large.

Step 4: Cloud-ify your workloads.

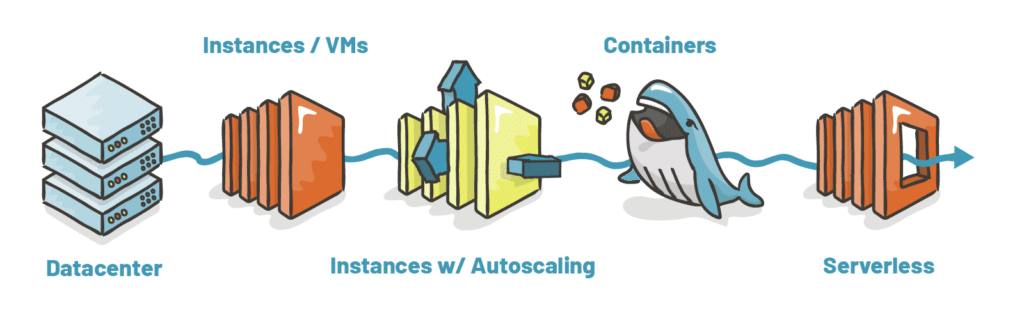

Corey coined this the “cloudiness continuum.” Be less like a datacenter and as serverless as you can. In generic terms, this is about lowering execution time of compute.

Step 5: Pre-pay for resources.

Reserved Instances, Savings Plans, private pricing contracts. These are the last things you do, since these lock in architectural decisions.

Step 6: Repeat

And lastly, repeat the process often. Things change regularly in customer environments, so it’s always worth revisiting past discussions.

You’ll note that this framework isn’t an exhaustive list of things to check. That’s because cloud costs–and cloud architecture–can’t be reduced to simple lists. These are complex problems and not every solution applies to every situation.

Human expertise is necessary.